In today’s blog post, let’s discuss the major AWS S3 storage classes, the S3 updates released over the years, what each storage class is designed and optimized for, and how you can set up easy-to-configure S3 lifecycle policies to take advantage of S3 automation and get some serious discounts on cloud storage.

Amazon Simple Storage Service (better known as AWS S3) is AWS’s object storage service.

It is one of Amazon’s most widely used services, commonly used for file storage, data lakes, big data, log hosting, static website hosting, serverless applications, storing artifacts in a CI/CD pipeline, and general object storage.

AWS S3 is the easiest storage option on AWS to set up and use. However, with unbounded storage space that scales automatically comes the pitfall of cost optimization.

In the majority of S3 configurations, data is stored in storage classes that are not cost-optimized for how it is actually used, which results in unnecessarily large bills.

AWS S3 Pricing:

To understand S3 cost optimization, you will first need to understand the pricing structure for S3 buckets.

AWS S3 pricing consists of the following main components.

Capacity: The main component of your S3 costs. This is the total size of all documents, objects, and files in your AWS S3 bucket.

Requests: In addition to the size of the S3 bucket, you are charged for API calls made to the S3 bucket. These consist of PUT, COPY, POST, LIST, GET, SELECT, Lifecycle Transition, and Data Retrieval requests. Delete and Cancel requests are free of cost. For Glacier, you are only charged for UPLOAD API requests, while LISTVAULTS, GETJOBOUTPUT, DELETE, and all other requests are free. Be advised that Glacier has a 90-day minimum storage requirement, meaning any object that is deleted before 90 days incurs an additional charge.

Data Transfer: You are charged for data transfer from S3 to the internet or another Region.

Thus, for cost optimization, it’s imperative that resources that utilize or consume AWS S3 be hosted in the same region as the S3 bucket.
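The three components above can be combined into a rough monthly estimate. The following is a minimal sketch, not an official calculator: the `S3Prices` class and `estimate_monthly_cost` function are hypothetical names, and every price except the $0.023 per GB storage figure quoted later in this post is an assumed placeholder that you should replace with the current rates for your Region.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class S3Prices:
    """Illustrative USD prices. All values except storage are assumptions."""
    storage_per_gb_month: float = 0.023      # S3 Standard figure used in this post
    per_1000_write_requests: float = 0.005   # assumed: PUT, COPY, POST, LIST
    per_1000_read_requests: float = 0.0004   # assumed: GET, SELECT
    transfer_out_per_gb: float = 0.09        # assumed: transfer to the internet


def estimate_monthly_cost(stored_gb, write_requests, read_requests,
                          transfer_out_gb, prices=S3Prices()):
    """Return a per-component breakdown of an estimated monthly S3 bill."""
    capacity = stored_gb * prices.storage_per_gb_month
    requests = (write_requests / 1000 * prices.per_1000_write_requests
                + read_requests / 1000 * prices.per_1000_read_requests)
    data_transfer = transfer_out_gb * prices.transfer_out_per_gb
    return {
        "capacity": capacity,
        "requests": requests,
        "data_transfer": data_transfer,
        "total": capacity + requests + data_transfer,
    }


if __name__ == "__main__":
    # 1 TB stored, 1M writes, 10M reads, 100 GB sent to the internet
    for component, cost in estimate_monthly_cost(
            1000, 1_000_000, 10_000_000, 100).items():
        print(f"{component}: ${cost:.2f}")
```

Keeping the consumers of the bucket in the same Region corresponds to driving `transfer_out_gb` toward zero in this sketch.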

Now that we have the pricing out of the way, let’s discuss the various options in terms of S3 storage classes that AWS provides.

S3 Standard – The most commonly used and configured S3 storage option. It provides quick and easy access with millisecond data retrieval times. However, in terms of cost, it is the most expensive storage option.

S3 Intelligent-Tiering – If you routinely work with files larger than 128 KB, S3 Intelligent-Tiering is a strong choice. This storage class automatically places your objects in the most cost-effective access tier based on usage and access patterns. Depending on how frequently files are accessed, S3 automatically moves them between frequent access, infrequent access, and, if enabled, archive tiers comparable to AWS Glacier (which is the S3 long-term archive solution).

S3 Infrequent Access – For files and objects that are not accessed frequently, you can cut storage costs by moving them to S3 Infrequent Access. The catch, however, is the access cost: while this class still offers millisecond access times, request and retrieval costs are almost double those of S3 Standard.

S3 One Zone-Infrequent Access – Provides a flat 20% storage discount compared to S3 Infrequent Access at the cost of redundancy, because data is kept in a single Availability Zone. It is NOT ideal for storage that needs high reliability, such as critical database backups, key logs, and documents, so you would probably not use this storage class for that kind of data.

However, this is a particularly useful storage option for rarely accessed content that can be rebuilt in case of data loss.

AWS S3 Glacier – The standard AWS archival storage option. Glacier provides resilient storage for a fraction of the cost of S3 Standard ($0.023 per GB per month for S3 Standard vs $0.0036 per GB per month for Glacier, which is roughly 84% cheaper). The catch? Data in the S3 Glacier class requires you to settle for a retrieval time of anywhere from one to 12 hours. The storage class is perfect for objects that need to be retrieved only occasionally but still need to be stored securely and safely.

AWS S3 Glacier Deep Archive – Deep Archive provides even lower storage costs than S3 Glacier ($0.00099 per GB per month), with a retrieval time of around 12 hours for object restoration. Due to the extremely low cost, this storage class is perfect for compliance data that must be stored reliably and securely for a long time yet only needs to be accessed once or twice a year.
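To put the per-GB figures quoted above side by side, here is a small sketch that computes how much cheaper each archive class is than S3 Standard. It uses only the three prices mentioned in this post; the dictionary and function names are illustrative, and actual prices vary by Region and change over time.

```python
# Per-GB monthly storage prices as quoted in this post (USD).
PRICES_PER_GB_MONTH = {
    "S3 Standard": 0.023,
    "S3 Glacier": 0.0036,
    "S3 Glacier Deep Archive": 0.00099,
}


def savings_vs_standard(storage_class):
    """Percentage saved on storage relative to S3 Standard."""
    standard = PRICES_PER_GB_MONTH["S3 Standard"]
    price = PRICES_PER_GB_MONTH[storage_class]
    return (standard - price) / standard * 100


for name, price in PRICES_PER_GB_MONTH.items():
    print(f"{name}: ${price * 1000:.2f} per 1,000 GB per month, "
          f"{savings_vs_standard(name):.1f}% cheaper than S3 Standard")
```

Note that this compares storage prices only. Retrieval fees, request charges, and minimum storage durations are not included, so the real savings depend on how often the archived data is read back.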

Understanding the different storage options is important for cost optimization and utility, but the real key to cost savings is setting up lifecycle policies so that the data inside your S3 buckets moves from one storage class to a cheaper one based on business logic or access patterns.

There are two kinds of lifecycle policies: transition and expiration policies. As the names suggest, transition policies move objects from one storage class to another based on conditions, whereas expiration policies delete objects once the criteria are fulfilled.

Let’s take a real-life example. Suppose your organization handles claims for medical procedures and processes receipts, which are stored in S3. Each receipt is needed for active claims for 30 days, is needed by accounting and finance until the end of the quarter, and must be stored for a year for compliance purposes, after which it can be deleted.

To cost optimize the above scenario, you will need an S3 bucket with the following lifecycle policies.

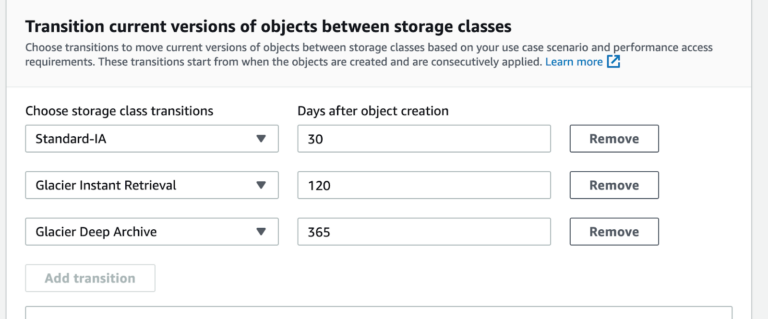

- Move objects to Infrequent Access after 30 days

- Move objects from Infrequent Access to S3 Glacier after 120 days (end of the quarter)

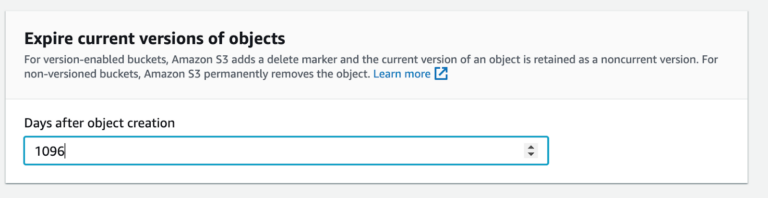

- Delete objects from S3 Glacier after one year

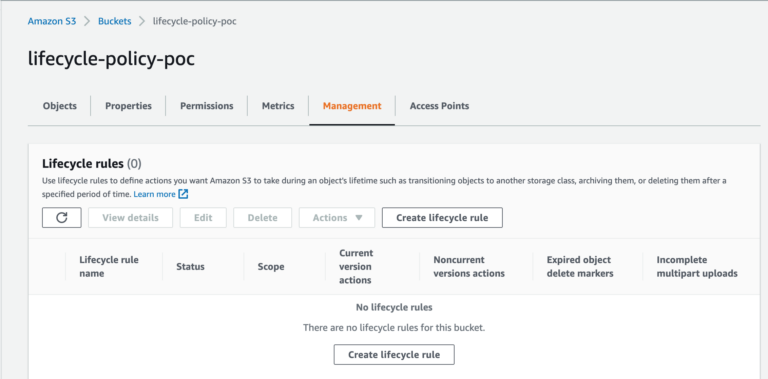

To set this up, go to the S3 console, open your bucket, and click on the Management tab.

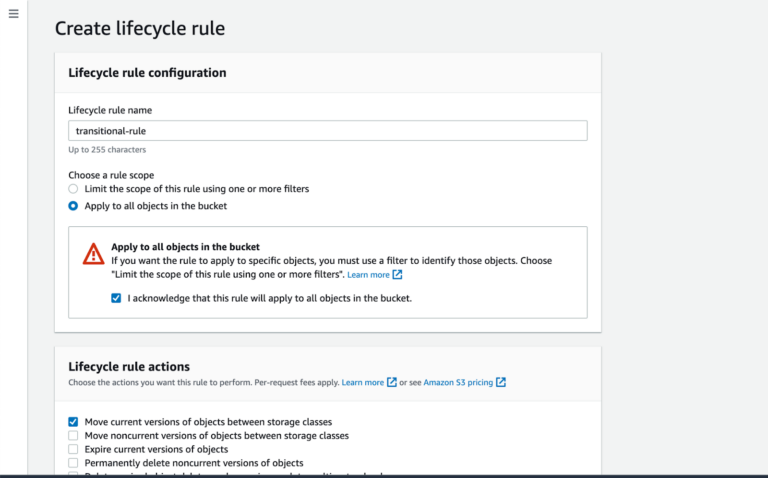

You can apply the rules to a prefix or to all objects in a bucket. There are options to apply the rule to previous object versions as well, or only to current versions.

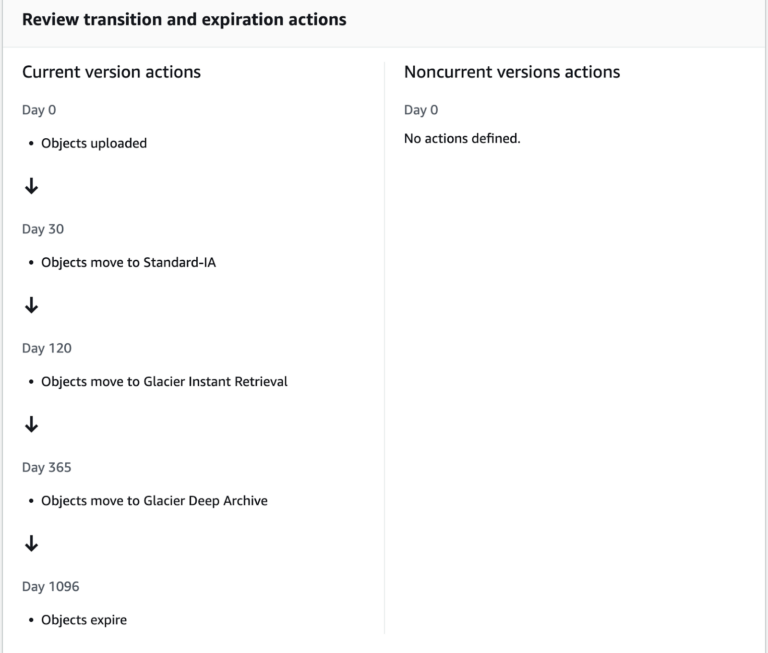

You can set up multiple transition phases in a single lifecycle policy. So, to fit the scenario discussed earlier, we have set up 3 phases.
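If you prefer to manage the rule in code rather than in the console, the same three phases can be expressed as a single lifecycle rule. The sketch below assumes the `boto3` SDK is installed and credentials are configured; the bucket name, the `receipts/` prefix, and the rule ID are hypothetical placeholders, and the day counts come from the claims scenario above.

```python
def build_claims_lifecycle(prefix="receipts/"):
    """Build a lifecycle configuration with two transitions and one expiration.

    The prefix and rule ID are placeholders chosen for illustration.
    """
    return {
        "Rules": [
            {
                "ID": "claims-receipts-lifecycle",
                "Status": "Enabled",
                # Limit the rule to one prefix; use {"Prefix": ""} for all objects.
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    # Phase 1: active claims window is over after 30 days.
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    # Phase 2: quarter has closed 120 days after creation.
                    {"Days": 120, "StorageClass": "GLACIER"},
                ],
                # Phase 3: compliance retention ends after one year.
                "Expiration": {"Days": 365},
            }
        ]
    }


def apply_lifecycle(bucket_name, config):
    """Apply the configuration to a bucket (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without the SDK

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket_name,
        LifecycleConfiguration=config,
    )


if __name__ == "__main__":
    import json
    print(json.dumps(build_claims_lifecycle(), indent=2))
    # apply_lifecycle("my-claims-bucket", build_claims_lifecycle())  # hypothetical bucket
```

Be aware that applying a lifecycle configuration replaces any configuration already on the bucket, so include every rule you want to keep in the same call.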

If you select the option to permanently delete object versions, you will get an additional configuration panel that allows you to configure when those versions are deleted as well.

When you are done adding policies, there will be a breakdown that shows you what the phases will look like.
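To sanity-check the phases against the claims scenario, the timeline can be modeled in a few lines. This is an illustrative sketch with hypothetical names, assuming the 30-day, 120-day, and one-year thresholds from the example; in practice S3 applies lifecycle actions asynchronously, so the actual move may happen somewhat after the threshold day.

```python
# (age in days at which the phase starts, storage class during that phase)
PHASES = [
    (0, "STANDARD"),
    (30, "STANDARD_IA"),
    (120, "GLACIER"),
]
EXPIRE_AFTER_DAYS = 365


def storage_class_at(age_days):
    """Return the storage class an object should be in at a given age.

    Returns None once the object is past its expiration age.
    """
    if age_days < 0:
        raise ValueError("age_days must be non-negative")
    if age_days >= EXPIRE_AFTER_DAYS:
        return None
    current = PHASES[0][1]
    for start_day, storage_class in PHASES:
        if age_days >= start_day:
            current = storage_class
    return current


for age in (0, 29, 30, 119, 120, 364, 365):
    print(f"day {age}: {storage_class_at(age) or 'deleted'}")
```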

The key to cost optimization with AWS S3 is understanding and utilizing the various S3 storage classes based on your use cases. Adding lifecycle policies that automatically move data from the most expensive storage class to the cheapest suitable one can dramatically reduce your AWS spending and put you on the path to a cost-optimized, AWS Well-Architected environment.